Posted On Apr 29 2024 | 16:18

In this digital age, the rapid advancement of technology has brought both incredible opportunities and new challenges. One such challenge is the rise of deepfakes, a term coined to describe highly realistic fake videos and voice recordings created with generative AI. Deepfakes have gained significant attention because of their potential to deceive and manipulate, threatening individuals, businesses, and society.

Deepfakes are created with generative AI algorithms that can produce images, videos, and audio that appear authentic. These algorithms analyze large amounts of data to learn and mimic the patterns and characteristics of a target individual, which lets them produce convincing imitations. The technology is impressive, but it has raised a web of ethical and security concerns.

Understanding Generative AI and Its Role in Creating Deepfakes

Generative AI is the branch of artificial intelligence focused on creating new content. Its algorithms learn patterns from training data and generate output that closely mimics that data. For deepfakes, generative AI algorithms analyze extensive datasets of images, videos, and audio recordings of a specific individual to create realistic imitations. One well-known example is a deepfake video of Barack Obama:

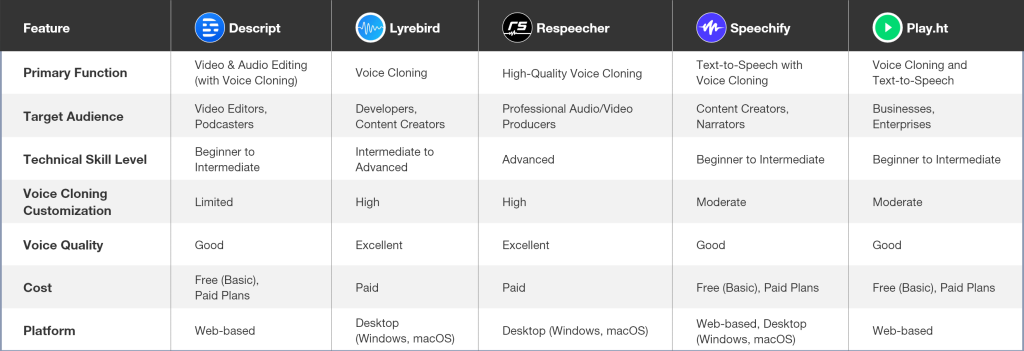

Deepfakes exploit the power of generative AI by combining facial recognition, image processing, and voice synthesis technologies. These algorithms analyze facial movements, expressions, and vocal patterns to create highly convincing imitations of the target individual. As generative AI continues to evolve, the quality of deepfakes has improved significantly, making it increasingly difficult to distinguish real content from fake. Some tools used to create deepfakes and clone voices are:

Deepfake Tools:

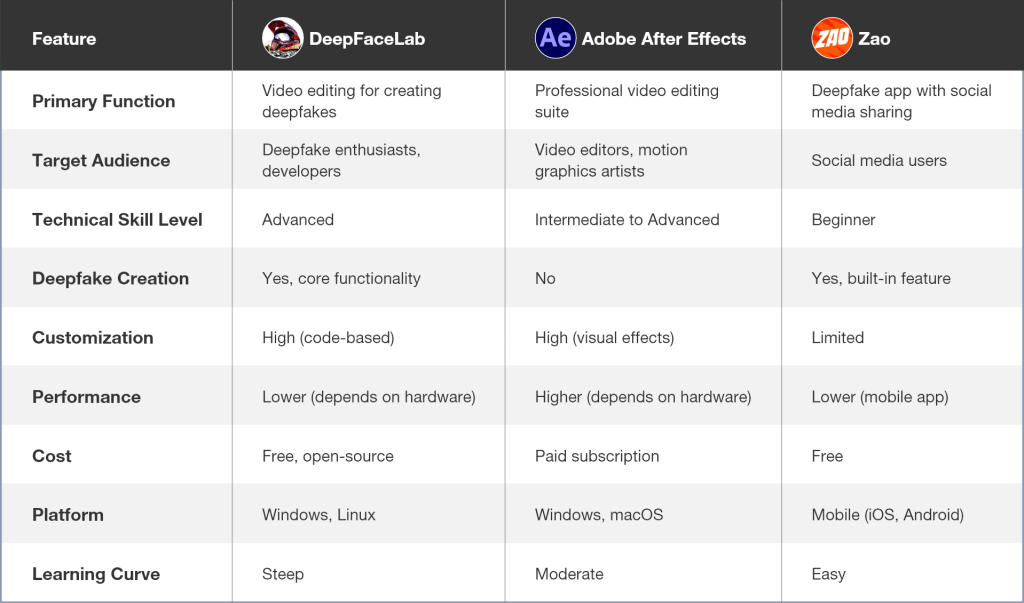

Deepfake video generation tools are advancing rapidly, enabling users to manipulate videos by superimposing one person’s face onto another person’s body. These tools range from user-friendly applications designed for social media entertainment to sophisticated software capable of producing remarkably lifelike content. As access to these tools continues to expand, it becomes crucial to acknowledge both the potential benefits and the risks associated with deepfakes.

The proliferation of deepfakes poses severe dangers in the age of cyberthreats. Deepfakes can be utilized for various malicious purposes, including identity theft, defamation, election interference, and financial scams. The potential consequences of such misuse are far-reaching and can lead to reputational damage, financial loss, or even political instability.

One of the most significant risks associated with deepfakes is their potential to manipulate public opinion and sow discord. With the ability to create convincing videos of public figures saying or doing things they never did, deepfakes can be used to spread misinformation and incite social unrest. In a world where trust and truth are already fragile, the emergence of deepfakes amplifies the challenges faced by individuals and society.

The Impact of Deepfakes on Cybersecurity and Cybercrime

Deepfakes have a profound impact on both cybersecurity and cybercrime. This technology can render traditional methods of authentication and verification inadequate. Deepfakes can be used to bypass biometric security systems, fool facial recognition software, and deceive voice authentication systems. This poses a significant threat to individuals and organizations that rely on these technologies for secure access control.

Furthermore, deepfakes can be used as a tool for cybercriminals to perpetrate fraud and gain unauthorized access to sensitive information. By impersonating trusted individuals, cybercriminals can manipulate victims into revealing confidential data or performing malicious actions. The implications of such attacks extend beyond financial loss, as they can compromise national security, intellectual property, and personal privacy.

How NVIDIA Is Working to Combat Deepfakes

Nvidia is developing a method to embed watermarks into content generated by generative AI models. These watermarks would be imperceptible to the human eye but detectable by AI systems. This technology aims to:

Improve content verification: By identifying watermarks, AI systems can verify the authenticity of content and flag deepfakes.

Maintain transparency: Watermarks can indicate that content has been created using AI, allowing viewers to be aware of its artificial origin.

Deter misuse: The possibility of embedded watermarks might discourage bad actors from creating malicious deepfakes.
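Nvidia's method is not described here. To illustrate what "imperceptible but machine-detectable" can mean, below is a toy least-significant-bit (LSB) watermark. This is a different, much simpler technique swapped in for illustration. It assumes an image is a flat list of 8-bit grayscale pixel values, and the function names are hypothetical. Each pixel changes by at most 1, which the eye cannot see, yet a detector that knows the pattern can read it back.

```python
# Toy LSB watermark for illustration only; this is NOT NVIDIA's technique.
# Assumption: an "image" is a flat list of ints in 0..255 (one grayscale channel).

def embed_watermark(pixels: list[int], bits: list[int]) -> list[int]:
    """Hide each watermark bit in the least significant bit of one pixel."""
    if len(bits) > len(pixels):
        raise ValueError("watermark is longer than the image")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        # Clear the lowest bit, then set it to the watermark bit.
        # Each pixel value changes by at most 1, which is visually imperceptible.
        marked[i] = (marked[i] & ~1) | bit
    return marked


def extract_watermark(pixels: list[int], length: int) -> list[int]:
    """Read the lowest bit of the first `length` pixels."""
    return [p & 1 for p in pixels[:length]]


def has_watermark(pixels: list[int], expected: list[int]) -> bool:
    """Hypothetical detector: report whether the expected bit pattern is present."""
    return extract_watermark(pixels, len(expected)) == expected


if __name__ == "__main__":
    original = [200, 13, 77, 154, 91, 240, 8, 66]
    mark = [1, 0, 1, 1, 0, 0, 1, 0]
    marked = embed_watermark(original, mark)
    print(marked)                            # [201, 12, 77, 155, 90, 240, 9, 66]
    print(has_watermark(marked, mark))       # True
    print(has_watermark(original, mark))     # False
```

An LSB mark like this does not survive lossy compression, resizing, or cropping. That fragility is why production watermarking schemes for AI-generated media use more robust embedding.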

As the threat of deepfakes continues to grow, researchers and technologists are working on innovative solutions to detect and combat them. Advanced algorithms and machine learning models are being developed to analyze videos and audio recordings for signs of manipulation. These algorithms can identify inconsistencies in facial movements, audio characteristics, and other subtle cues that distinguish deepfakes from genuine content.

Additionally, the development of blockchain technology shows promise in combating deepfakes. By leveraging the decentralized and immutable nature of blockchain, it becomes possible to create an indelible record of the creation and distribution of digital content. This can enhance accountability and enable the verification of authentic content, mitigating the spread of deepfakes.
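The "indelible record" idea rests on hash chaining. Each record stores a hash of the content plus the hash of the previous record, so editing any earlier entry breaks every link after it. Below is a minimal single-process sketch of that idea. It is an assumption-laden stand-in for a blockchain: there is no distribution or consensus, and the function names and record fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def _record_hash(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same body always hashes the same way.
    return sha256_hex(json.dumps(body, sort_keys=True).encode())


def append_record(ledger: list[dict], content: bytes, creator: str) -> dict:
    """Register a piece of content, linking it to the previous record."""
    prev_hash = ledger[-1]["record_hash"] if ledger else GENESIS
    body = {"content_hash": sha256_hex(content), "creator": creator, "prev_hash": prev_hash}
    record = dict(body, record_hash=_record_hash(body))
    ledger.append(record)
    return record


def verify_ledger(ledger: list[dict]) -> bool:
    """Recompute every link; any edited record breaks the chain."""
    prev_hash = GENESIS
    for record in ledger:
        body = {k: record[k] for k in ("content_hash", "creator", "prev_hash")}
        if record["prev_hash"] != prev_hash or record["record_hash"] != _record_hash(body):
            return False
        prev_hash = record["record_hash"]
    return True


def is_registered(ledger: list[dict], content: bytes) -> bool:
    """Check whether this exact content was recorded at creation time."""
    h = sha256_hex(content)
    return any(r["content_hash"] == h for r in ledger)


if __name__ == "__main__":
    ledger: list[dict] = []
    append_record(ledger, b"original interview video", "newsroom-camera-01")
    append_record(ledger, b"edited broadcast cut", "newsroom-editor")
    print(verify_ledger(ledger))                      # True
    print(is_registered(ledger, b"altered video"))    # False
    ledger[0]["creator"] = "someone-else"             # tamper with history
    print(verify_ledger(ledger))                      # False
```

A hash only proves that content is byte-for-byte unchanged since registration. It cannot show that the original recording was genuine in the first place.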

Wrapping up

In conclusion, the rise of deepfakes poses significant challenges to individuals, businesses, and society. The power of generative AI algorithms to create highly realistic imitations calls for urgent action in regulating their development and use. Combating deepfakes requires a multi-faceted approach, involving technological advancements, regulatory frameworks, and education.

Stay informed and stay vigilant. Educate yourself about deepfakes and their implications. Talk to our experts to learn how People Tech Group can help clients run proofs of concept (POCs) for Nvidia’s upcoming solution, add intermediary solutions, or implement legitimate deepfake applications that generate video content for training or entertainment. Together, we can protect ourselves and uphold the integrity of digital content.