Posted On Apr 04 2024 | 11:36

The incredible advancements in AI are opening a whole new world of possibilities, but they are also a complex issue. On the one hand, AI developers have enabled very rapid adoption by providing the most basic consumer user interface known for AI chatbots (such as Bard and ChatGPT): a text box with prompts to ask the machine questions. However, from a cybersecurity standpoint, the growing use of these generative AI tools has brought about a concerning increase in social engineering risks.

The AI revolution is unlocking a world of possibilities, but it is not without its challenges. AI chatbots like Bard and ChatGPT have made huge strides in user-friendliness, using uncomplicated text boxes and prompts. However, this convenience comes with a downside: an increase in social engineering risks. As these AI tools become more popular, cybersecurity concerns are growing.

Attackers are well-versed in phishing attacks. AI is aiding attackers with advanced phishing techniques. It is high time for enterprises to incorporate phishing simulations, phishing simulation tools, and phishing protection solutions to safeguard themselves from AI-enhanced social engineering attacks.

Social engineering involves tricking, swaying, or manipulating individuals to take over a computer system. Explore social engineering further in our previous: https://peopletech.com/blogs/dont-be-a-victim-learn-about-social-engineering-tricks-like-phishing-vishing-smishing-discover-smart-tips-to-protect-yourself-your-data/

According to the 16th annual Data Breach Investigations Report (DBIR), the human element is involved in over three-quarters (74%) of breaches this year, with social engineering playing a significant role in those breaches.

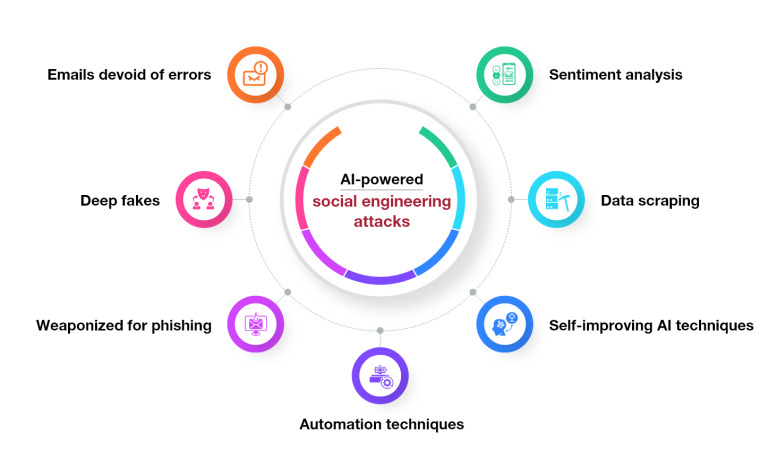

Phishers, who extensively engage in phishing attacks, along with social engineers and threat actors, are entrepreneurs. Therefore, they readily adopt any new strategy that offers them an advantage. Adversaries can utilize AI to create sophisticated social engineering attacks in several ways.

1. Emails devoid of errors: Typical phishing attempts often include numerous grammatical errors because the culprits are often not fluent in the language of their target audience. Attackers can create incredibly error-free emails with proper grammar and spelling correction using AI technologies like ChatGPT, making them look like a human wrote them. So that basic phishing simulation tools would fail to recognize them.

2. Deep fakes: Artificial intelligence (AI) can help produce convincingly realistic deep fakes, such as synthetic films and virtual personas. Explore our deep fakes blogs for more information. Series 1, Series 2 and Series 3. Scammers can pose as actual people—a senior executive, a client, a partner, etc.—engage victims in dialogue and use social engineering to trick them into divulging confidential information, making purchases, or disseminating false information. It will be difficult for basic phishing simulations to identify and evade them.

3. Weaponized for phishing: Cybercriminals can perform sophisticated voice phishing (or “vishing”) assaults by emulating human speech and audio. The Federal Trade Commission has cautioned about a scam involving impostors who pretend to be family members, using AI voice cloning technology to deceive victims into sending money by falsely claiming a family emergency.

4. Automation techniques: AI tools have the potential to be used as phishing protection solutions. For instance, researchers were able to effectively trick a Bing chatbot into pretending to be a Microsoft employee by employing a sophisticated technique known as Indirect Prompt Injection. The chatbot then generated phishing messages that asked users for their credit card information.

5. Self-improving AI techniques: Threat actors can conduct highly focused social engineering/phishing attacks on an industrial scale by utilizing intelligent scripting, autonomous agents, and other automation tools.

6. Data scraping: AI can adapt based on its knowledge (differentiating between effective and ineffective strategies) and refine its sophisticated phishing techniques to determine the most effective approach. Automated information extraction from websites is known as data scraping. Using specialized tools, scammers gather information such as text, photos, and connections. They also use sophisticated AI algorithms to examine the scraped data, looking for trends and confidential information. Scammers use this wealth of information to construct messages tailored to them, making it more difficult to spot frauds.

7. Sentiment analysis: is an AI method for social media profiling that finds subtle emotional overtones in text. Using sophisticated AI algorithms, they delve into people’s social media profiles, uncovering insights into their lives, interests, and relationships. This enables scammers to construct detailed profiles, intertwining common interests, daily activities, and shared connections. Despite never having met, scammers can create a sense of familiarity, making it easier to gain their victims’ trust and execute their deceitful schemes more effectively.

1. User Training: Regular training, phishing tests, and simulation exercises can help users recognize and report social engineering attempts. Organizations that invest in comprehensive security training see a significant decrease in their phish-prone percentage.

2. AI-Based Security Controls: Implementing AI-powered security tools. People Tech’s powerful AI-powered security tools can help detect and respond to advanced social engineering attacks. AI can analyze messages and URLs for signs of phishing and assist in incident response by isolating infected devices and notifying administrators.

3. Strong Authentication: Multi-factor authentication (MFA) is essential, but choosing phishing-resistant MFA solutions is critical. This provides an additional level of security, increasing the difficulty for attackers to breach accounts even with stolen credentials.

In conclusion, a comprehensive strategy must be employed to prevent the above-mentioned AI advanced Social Engineering attacks. This involves increasing awareness, offering educational resources, and implementing phishing simulations, phishing simulation tools, and phishing protection solutions. People Tech is dedicated to integrating effective strategies and approaches to decrease these vulnerabilities. This involves responding appropriately and offering ongoing vigilant surveillance and protection assistance. It is essential to recognize that no single solution can guarantee complete protection against phishing risks; therefore, we promote a comprehensive approach that utilizes technology, education, and user awareness to significantly reduce the chances of becoming victims of social engineering attacks.

Connect with us today! to explore how our solutions can protect your enterprise from the growing risks associated with generative AI. Let us discuss your needs and find the right strategy for you.